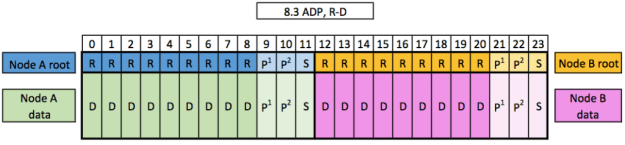

Ever since clustered Data ONTAP went mainstream over 7-Mode, the dedicated root aggregate tax has been a bone of contention for many, especially for those entry-level systems with internal drives. Can you imagine buying a brand new FAS2220 or FAS2520 and being told that not only are you going to lose two drives as spares, but also another six to your root aggregates? This effectively left you with four drives for your data aggregate, two of which would be devoted to parity. I don’t think so. Now, this is a bit of an extreme example that was seldom deployed. Hopefully you had a deployment engineer who cared about the end result and would use RAID-4 for the root aggregates and maybe not even assign a spare to one controller, giving you seven whole disks for your active-passive deployment. Still, this was kind of a shaft. In a 24-disk system deployed active-active, you’d likely get something like this:

Enter ADP.

In the first version of ADP, introduced in clustered Data ONTAP 8.3, the operating system gained the ability to partition drives on systems with internal drives as well as the first two shelves of drives on All Flash FAS systems. What this meant was the dedicated root aggregate tax got a little less painful. In this first version, clustered Data ONTAP carved each disk into two partitions: a small one for the root aggregates and a larger one for the data aggregate(s). This was referred to as root-data or R-D partitioning. The smaller partition’s size depended on how many drives were in the system. You could technically buy a system with fewer than 12 drives, but the ADP R-D minimum was eight drives. By default, both partitions on a disk were owned by the same controller, splitting overall disk ownership in half.
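To make the default layout concrete, here is a rough Python sketch of root-data partitioning as described above. It is only a model for illustration, not anything ONTAP exposes: the names `PartitionedDisk`, `default_root_data_layout`, `node-a`, and `node-b` are all made up, and the only rules it encodes are the eight-drive minimum and the "same owner for both partitions, ownership split in half" default.

```python
from collections import Counter
from dataclasses import dataclass

ADP_MIN_DRIVES = 8  # root-data minimum cited in the text


@dataclass
class PartitionedDisk:
    """Hypothetical model of one disk carved into a root and a data partition."""
    name: str
    root_owner: str  # controller owning the small root partition
    data_owner: str  # controller owning the larger data partition


def default_root_data_layout(drive_count, nodes=("node-a", "node-b")):
    """Build the default layout: both partitions of a disk share an owner,
    and the disks are split evenly between the two controllers."""
    if drive_count < ADP_MIN_DRIVES:
        raise ValueError("root-data partitioning needs at least 8 drives")
    half = drive_count // 2
    disks = []
    for i in range(drive_count):
        owner = nodes[0] if i < half else nodes[1]
        disks.append(PartitionedDisk(f"disk{i}", owner, owner))
    return disks


layout = default_root_data_layout(12)
data_partitions_per_node = Counter(d.data_owner for d in layout)
print(dict(data_partitions_per_node))
```

In this model, every disk contributes a sliver to a root aggregate and the rest to a data aggregate, so no whole drive is lost to root duty.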

You could change this with some advanced command-line trickery to still build active-passive systems and gain two more drive partitions’ worth of data. Since you were likely only building one large aggregate on your system, you could also accomplish this in System Setup if you told it to create one large pool. This satisfied the masses for a while, but then those crafty engineers over at NetApp came up with something better.

Enter ADPv2.

Starting with ONTAP 9, not only did ONTAP get a name change (7-Mode hasn’t been an option since version 8.2.3), but it also gained ADPv2, which carves the aforementioned data partition in half. This is called R-D2 (Root-Data, Data) sharing, and it applies to SSDs only; spinning disks aren’t eligible for this secondary partitioning. In this new version, you get back one drive that you would have allocated as a spare, and you also get two of the parity drives back, lessening the pain of the RAID tax. With a minimum requirement of eight drives and a maximum of 48, here are the three main scenarios for this type of partitioning.

12 Drives:

24 Drives:

48 Drives:

As you can see, this is a far more efficient way of allocating your storage that yields up to ~17% more usable space on your precious SSDs.
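The ADPv2 rules mentioned above (SSDs only, between eight and 48 drives, data partition carved in half) can be summed up in a small Python sketch. Treat it as a reading aid under those stated assumptions: the function names and scheme labels are hypothetical, and it deliberately ignores platform rules such as which shelves qualify.

```python
ADPV2_MIN_DRIVES = 8   # minimum cited in the text
ADPV2_MAX_DRIVES = 48  # maximum cited in the text


def root_data_data_eligible(drive_count, is_ssd):
    """True if a set of drives meets the ADPv2 rules stated in this post.

    Only drive type and drive count are checked; platform eligibility
    is out of scope for this sketch.
    """
    return is_ssd and ADPV2_MIN_DRIVES <= drive_count <= ADPV2_MAX_DRIVES


def partitions_per_disk(scheme):
    """Return hypothetical partition labels for each partitioning scheme."""
    layouts = {
        "root-data": ("root", "data"),                  # first version of ADP
        "root-data-data": ("root", "data1", "data2"),   # ADPv2: data split in half
    }
    return layouts[scheme]


for count, ssd in [(12, True), (24, True), (48, True), (24, False)]:
    scheme = "root-data-data" if root_data_data_eligible(count, ssd) else "root-data"
    print(count, "SSD" if ssd else "HDD", scheme, partitions_per_disk(scheme))
```

The fallback to root-data for spinning disks in the loop is a simplification; whether a given HDD system gets partitioned at all depends on the platform rules covered earlier.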

So that’s ADP and ADPv2 in a nutshell: a change for the better. Interestingly enough, the ability to partition disks has led to a radical change in the Flash Pool world called “Storage Pools,” but that’s a topic for another day.